To this end, Antescofo relies on artificial machine listening and a domain specific real-time programing language. In this paper, we present the programing of time and interaction in Antescofo, a real-time system for performance coordination between musicians and computer processes during live music performance. This customization, coupled with the specific type of motion-tracking capabilities of the Leap, make the object an ideal environment for designers of gestural controllers or performance systems.

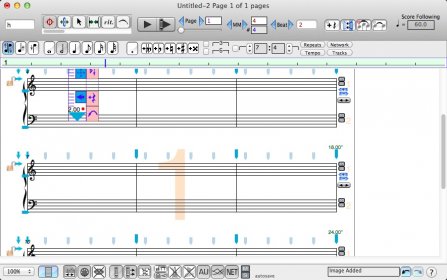

#NOTEABILITYPRO SOFTWARE#

Current third party externals for the device are non-customizable MRLeap is an external, programmed by one of the authors of this paper, that allows for diverse and customizable interfacing between the Leap Motion and Max/MSP, enabling a user of the software to select and apply data streams to any musical, visual, or other parameters. The Leap Motion, released in 2013, allows for high resolution tracking of very fine and specific finger and hand gestures, thus presenting an alternative option for composers, performers, and programmers seeking tracking of finer, more specialized movements. However, these systems lack the ability to track at high resolutions and primarily track larger body motions. Some examples of such systems include camera tracking, Kinect for Xbox, and computer vision algorithms. Several motion-tracking systems have been used in laptop orchestras, alongside acoustic instruments, and for other musically related purposes such as score following. Motion-capture is a popular tool used in musically expressive performance systems. More rigorous empirical testing will allow computational and metacreative systems to become more creative by definition and can be used to demonstrate the impact and novelty of particular approaches.

We argue that creative behavior cannot occur without feedback and reflection by the creative/metacreative system itself. The second type centers around internal evaluation, in which the system is able to reason about its own behavior and generated output. Here we take the stance that understanding human creativity can lend insight to computational approaches, and knowledge of how humans perceive creative systems and their output can be incorporated into artificial agents as feedback to provide a sense of how a creation will impact the audience. The first type of evaluation can be considered external to the creative system and may be employed by the researcher to both better understand the efficacy of their system and its impact and to incorporate feedback into the system. To address the evaluation of each of these aspects, concrete examples of methods and techniques are suggested to help researchers (1) evaluate their systems' creative process and generated artefacts, and test their impact on the perceptual, cognitive, and affective states of the audience, and (2) build mechanisms for reflection into the creative system, including models of human perception and cognition, to endow creative systems with internal evaluative mechanisms to drive self-reflective processes. In order to highlight the need for a varied set of evaluation tools, a distinction is drawn among three types of creative systems: those that are purely generative, those that contain internal or external feedback, and those that are capable of reflection and self-reflection. This article provides theoretical motivation for more systematic evaluation of musical metacreation and computationally creative systems and presents an overview of current methods used to assess human and machine creativity that may be adapted for this purpose. But the claim of creativity is often assessed, subjectively only on the part of the researcher and not objectively at all. 
#Earthquake M6+ : Mw 6.3 STRAIT OF GIBRALTAR #utm #alertīy Asmawisham | | Media#Earthquake M6+ : Mw 6.The field of computational creativity, including musical metacreation, strives to develop artificial systems that are capable of demonstrating creative behavior or producing creative artefacts. #apple #mīy Asmawisham | | MediaNoteAbilityPro 2.623 – Music notation package. NoteAbilityPro 2.623 – Music notation package.
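The three-way distinction among purely generative systems, systems with feedback, and self-reflective systems can be made more concrete with a toy sketch. This is an illustration under stated assumptions, not the evaluation method proposed in the article. An "artefact" is assumed to be a short melody of MIDI pitch numbers. The hypothetical `smoothness` function (minus the mean melodic leap size) stands in for the models of human perception mentioned above. `generate`, `with_feedback`, and `reflective` are likewise hypothetical names.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical toy representation: an artefact is a short melody of MIDI pitch numbers.
Artefact = List[int]
Evaluator = Callable[[Artefact], float]


def generate(rng: random.Random, length: int = 8, span: int = 12) -> Artefact:
    """Purely generative system: sample pitches within +/- span semitones of middle C (60)."""
    return [60 + rng.randint(-span, span) for _ in range(length)]


def smoothness(melody: Artefact) -> float:
    """Hypothetical stand-in for a perceptual model: minus the mean melodic leap size.

    Higher (closer to 0) means smoother. Assumes the melody has at least two notes.
    """
    leaps = [abs(b - a) for a, b in zip(melody, melody[1:])]
    return -sum(leaps) / len(leaps)


def with_feedback(rng: random.Random, evaluate: Evaluator, candidates: int = 20) -> Artefact:
    """Feedback system: an evaluator (internal or external) selects among generated candidates."""
    return max((generate(rng) for _ in range(candidates)), key=evaluate)


def reflective(rng: random.Random, evaluate: Evaluator,
               rounds: int = 5, target: float = -3.0) -> Tuple[Artefact, int]:
    """Self-reflective system: judges its own output and revises how it generates.

    Returns the chosen melody and the final pitch span the system settled on.
    """
    span = 12
    best = generate(rng, span=span)
    for _ in range(rounds):
        if evaluate(best) >= target:
            break  # the system judges its own output acceptable
        # Reflection step: the output is too jumpy, so narrow the generative range.
        span = max(1, span // 2)
        best = max((generate(rng, span=span) for _ in range(10)), key=evaluate)
    return best, span


if __name__ == "__main__":
    rng = random.Random(42)
    print("generative: ", generate(rng))
    print("feedback:   ", with_feedback(rng, smoothness))
    print("reflective: ", reflective(rng, smoothness))
```

In this sketch, the second and third tiers differ only in where the evaluation acts. `with_feedback` uses it to filter finished candidates. `reflective` uses it to revise its own generative parameter (`span`), which is one simple reading of self-reflection.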
